CeLLM = Cell + LLM: Automate your spreadsheet workflows

Suvansh Sanjeev • 2023-06-08

Give your spreadsheets the gift of AI with CeLLM: Unlock powerful insights with a mouse drag.

Note: Find the code and instructions for CeLLM here.

We're excited to introduce CeLLM, a way to integrate large language models (LLMs), like the ones behind ChatGPT, into your spreadsheets, changing the way you interact with them.

In the era of data-driven decision making, we often find ourselves working with large volumes of data, especially in the form of spreadsheets. Analyzing these data can sometimes be overwhelming and time-consuming. Wouldn't it be incredible if you could just ask your spreadsheet questions and receive intelligible answers? This is where CeLLM comes in.

CeLLM is a Google Sheets add-on that allows you to leverage the power of state-of-the-art LLMs, such as OpenAI's GPT-3.5 and GPT-4, and Anthropic’s Claude (including the 100k token context window model), right in your spreadsheets. It transforms your static spreadsheets into a dynamic conversational partner that understands your queries and examples and provides detailed responses, making data analysis more accessible and interactive. Your data is not sent anywhere other than to the LLM provider you select.

What does CeLLM do?

CeLLM simplifies your data analysis process and makes it interactive, fun, and more insightful. By leveraging LLMs to analyze and respond to queries, you can save significant time and effort on routine data tasks and instead focus on making informed decisions.

CeLLM works by taking advantage of GPT-3.5/4 and Claude, LLMs provided by OpenAI and Anthropic. You can call CeLLM in your spreadsheet as a function, just like SUM or AVERAGE. For example, you can use it in the following ways:

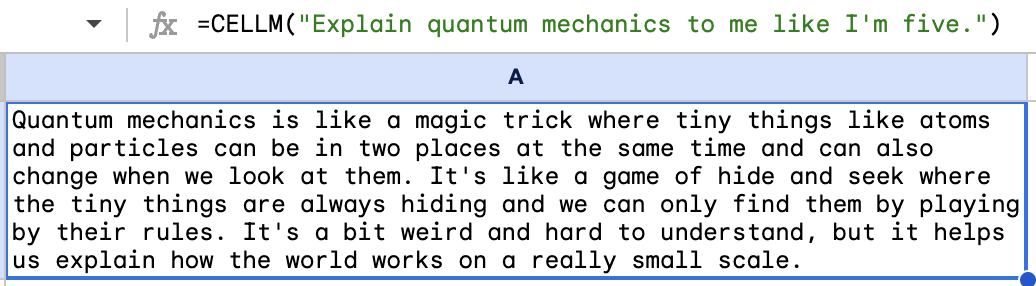

- As you would any LLM: The simplest usage is to produce responses to prompts:

CELLM("Explain quantum mechanics to me like I'm five.")

- To apply a prompt in many contexts at once:

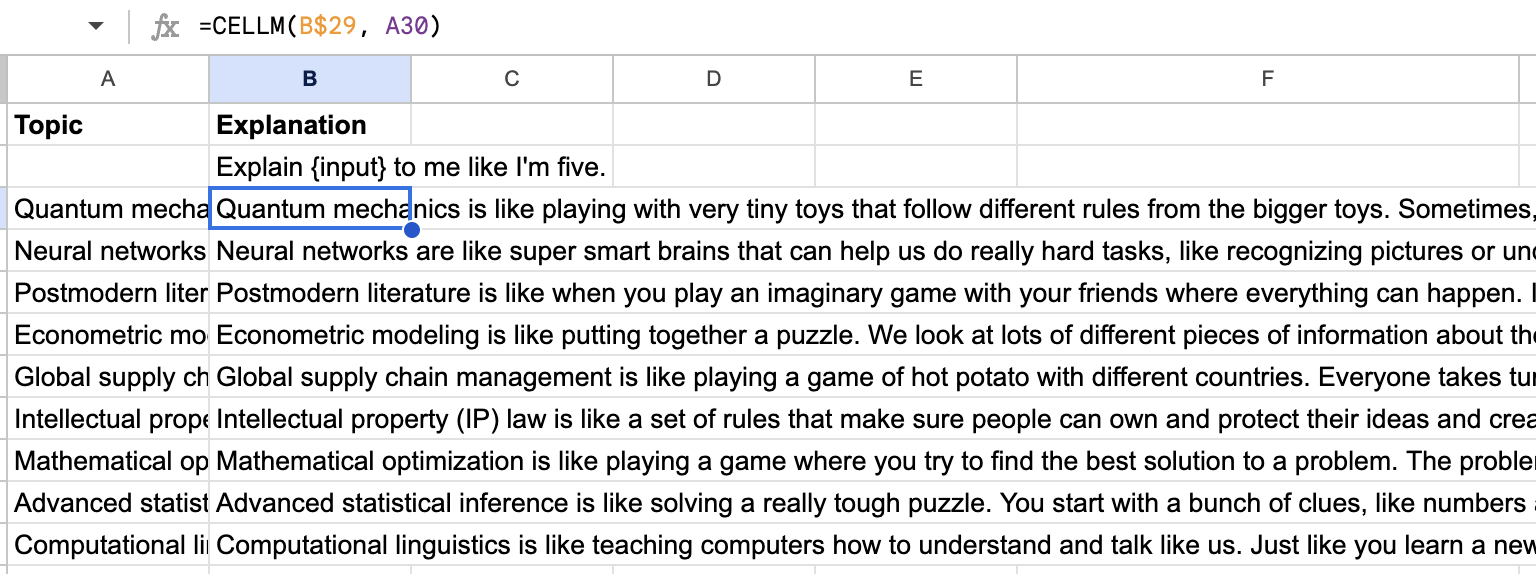

CELLM("Explain {input} to me like I'm five.", C1). Once you start to use spreadsheet cells to fill in prompts, the power of CeLLM becomes clearer. Imagine column C contains a list of topics you’ve been wondering about. By simply dragging this formula down to the length of the list, you can produce simple explanations of all these topics in seconds.

- To finish a task you’ve started:

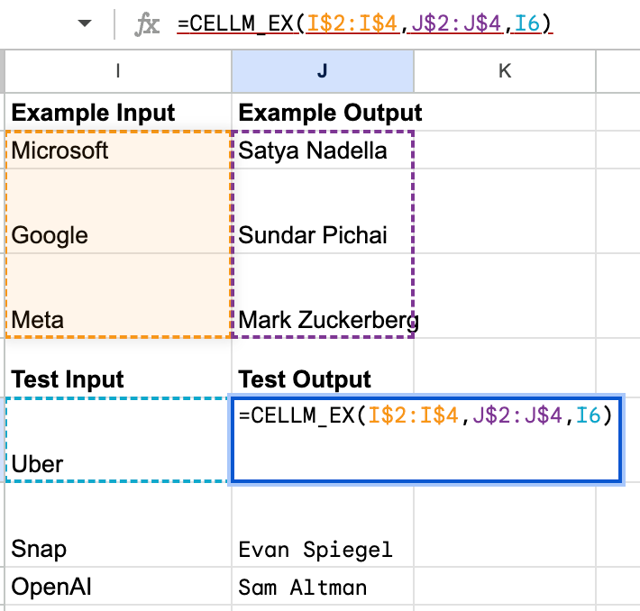

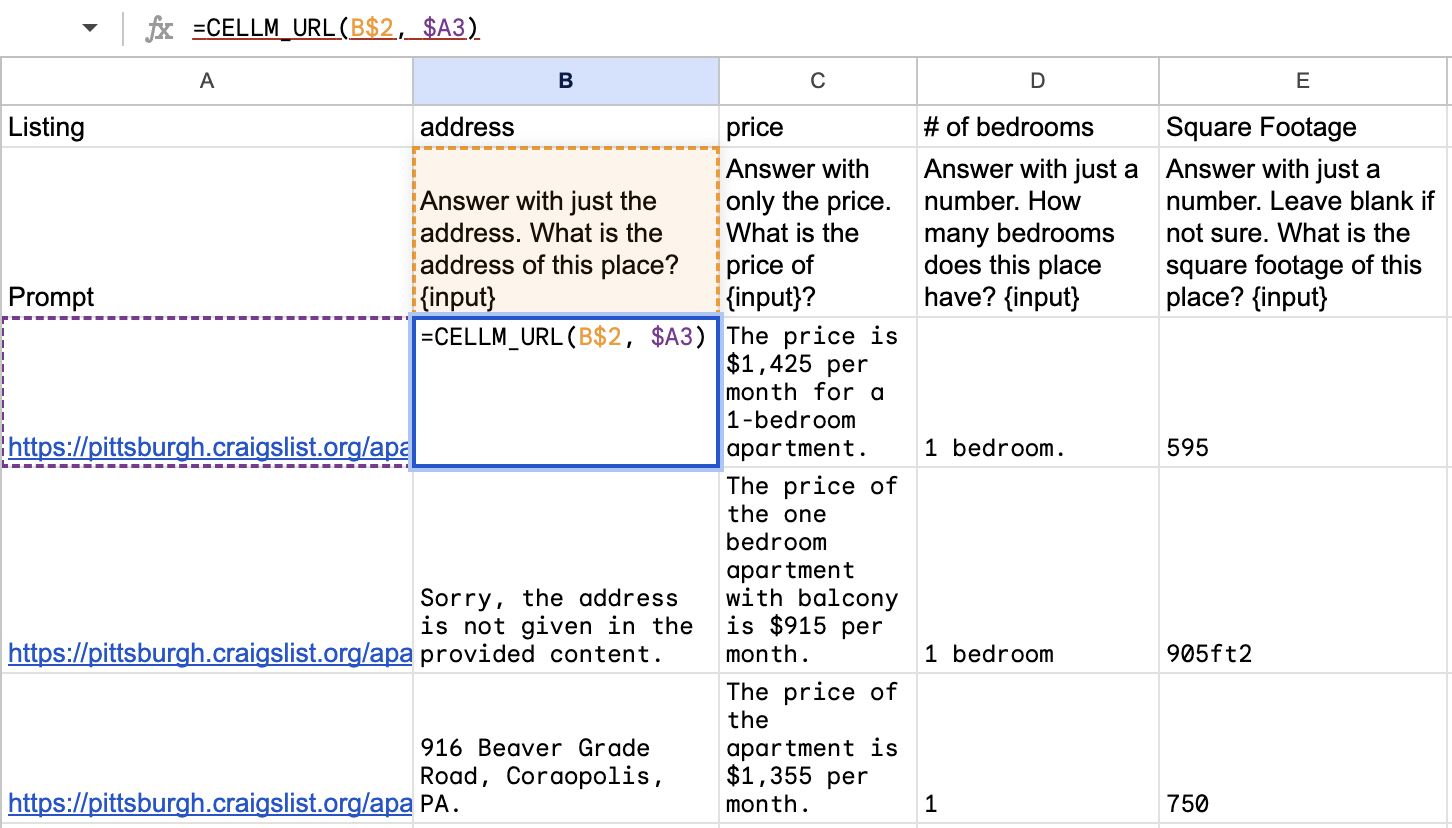

CELLM_EX(A1:A5, B1:B5, A6). In this case, A1:A5 contains example inputs whose corresponding outputs are in B1:B5. You want to automate the task for the rest of the column, so you write this formula in B6 and drag it down as far as the inputs in column A go. Once again, in a few seconds, your task is done. Of course, be sure to review the LLM’s work.

- To extract structured information from a collection of webpages:

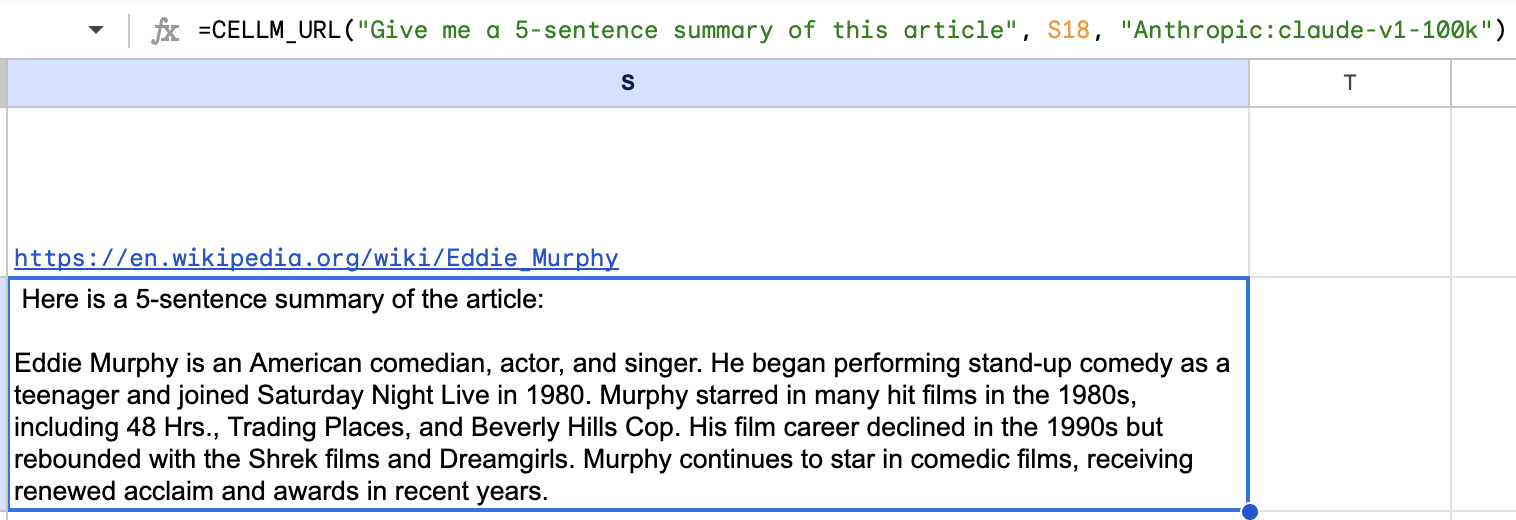

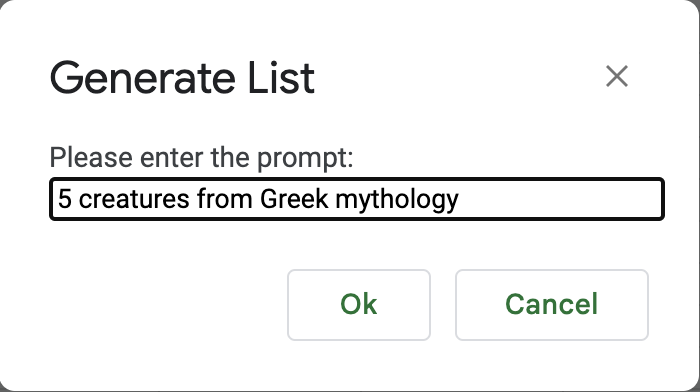

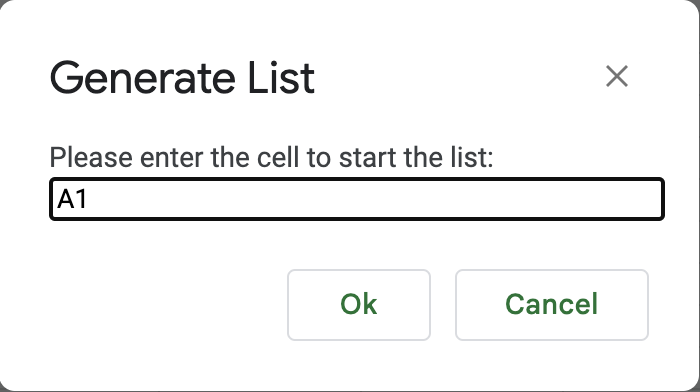

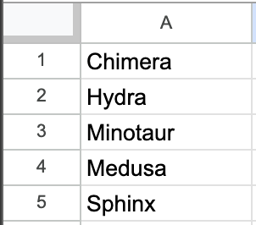

CELLM_URL("Give me a 5-sentence summary of this movie", "https://en.wikipedia.org/wiki/About_Time_(2013_film)", "Anthropic:claude-v1-100k"). Of course, the URLs can be specified by spreadsheet cell references, and a simple drag lets you extract information from a series of URLs at once. Note that this feature can use up tokens quickly on large webpages and is prone to timing out (Google limits how long a Sheets function can take to complete).

- To generate lists across spreadsheet cells: the Generate List option in the CeLLM menu (which appears once the add-on is installed) lets you describe a list of items to generate, with an optional quantity, and choose the spreadsheet cell where the list should begin. The LLM-generated list then populates the cells at and below that cell.

First input: prompt describing list

Second input: cell starting the list

Output

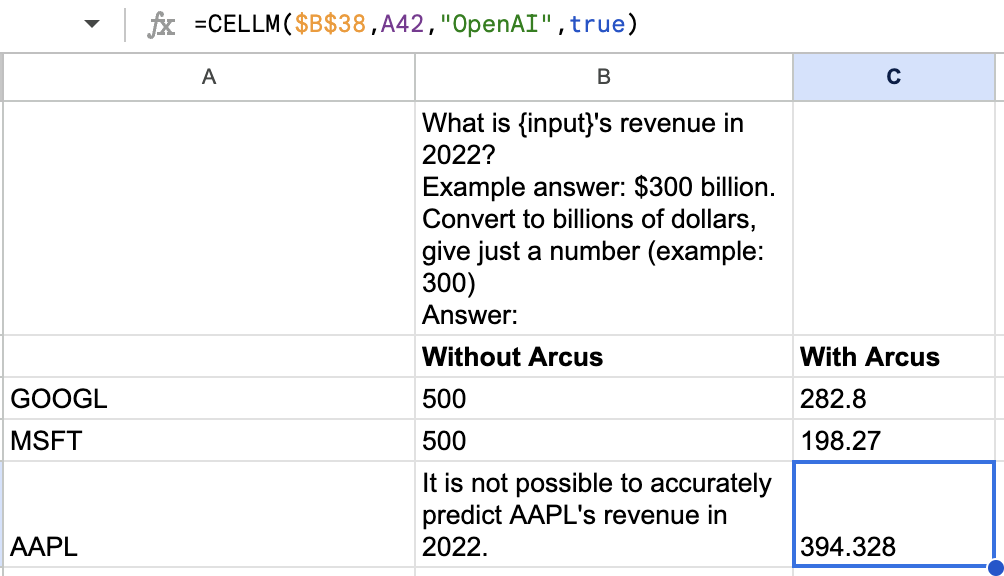

Arcus Prompt Enrichment

Brilliantly has partnered with Arcus to integrate Prompt Enrichment into CeLLM. When enabled, this feature lets CeLLM pull in relevant context to answer questions more accurately and reduce hallucinations (cases where the LLM makes up an answer). See the difference Arcus can make below. Currently, Arcus is supported only in the CELLM function. Arcus is rapidly rolling out features and supported domains, so stay tuned by following @ArcusHQ on Twitter!

Get started

Getting started with CeLLM is simple. Clone the GitHub repository, then follow the instructions in the README to set up CeLLM in your own Google Sheets. Remember to add your API keys in the CeLLM → Settings menu.

If you’re looking for ideas to get started, you can use CeLLM to compare the effects of different models, temperature settings, and prompts, right within your spreadsheet. See whether there are tasks you can specify more easily with examples than with prompts. Or use a formula drag to explain quantum mechanics at different levels of difficulty, for example for readers of different ages.

We're eager to hear about your experiences with CeLLM and see how it enhances your data interaction. Reach out at [email protected] or @BrilliantlyAI on Twitter. Happy spreadsheeting!

Documentation

Note that the spreadsheet functions (those whose names start with CELLM) take their arguments in a fixed order. That means if you want to specify the temperature, you must also specify all preceding arguments. This is because JavaScript, in which the functions are written, does not support keyword arguments.
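To make the ordering rule concrete, here is a small hypothetical JavaScript helper (cellmFormula and toFormulaLiteral are illustrative names, not part of CeLLM) that writes out a complete CELLM formula, filling each argument before temperature with the defaults documented below: llm "OpenAI", arcus FALSE, max_tokens 250, temperature 0.3. It assumes a locale that uses commas to separate formula arguments.

```javascript
// Hypothetical helper, not part of CeLLM: spells out every positional
// argument so that a later one (here, temperature) can be set.
const CELLM_DEFAULTS = { llm: "OpenAI", arcus: false, maxTokens: 250, temperature: 0.3 };

// Render a value as a Sheets formula literal: strings in double quotes
// (embedded quotes doubled), booleans as TRUE/FALSE, numbers unchanged.
function toFormulaLiteral(value) {
  if (typeof value === "string") return `"${value.replace(/"/g, '""')}"`;
  if (typeof value === "boolean") return value ? "TRUE" : "FALSE";
  return String(value);
}

// `input` is a cell reference such as "C1", so it is inserted unquoted.
// Options that are not given fall back to the documented defaults.
function cellmFormula(prompt, input, options = {}) {
  const o = { ...CELLM_DEFAULTS, ...options };
  const args = [
    toFormulaLiteral(prompt),
    input,
    toFormulaLiteral(o.llm),
    toFormulaLiteral(o.arcus),
    toFormulaLiteral(o.maxTokens),
    toFormulaLiteral(o.temperature),
  ];
  return `=CELLM(${args.join(", ")})`;
}

console.log(cellmFormula("Explain {input} to me like I'm five.", "C1", { temperature: 0.9 }));
// → =CELLM("Explain {input} to me like I'm five.", C1, "OpenAI", FALSE, 250, 0.9)
```

Pasting the printed formula into a cell raises the temperature to 0.9 while keeping every other setting at its default.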

Generate List

This functionality is useful for using LLMs to generate lists of items across multiple cells. Due to access limitations, this functionality can't be accomplished through spreadsheet functions, so it's tucked away in CeLLM → Generate List.
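The post doesn't say how the LLM's reply is split across cells. As a minimal sketch, assuming the model answers with one item per line (possibly numbered or bulleted), a hypothetical parseListResponse helper could turn the reply into the two-dimensional array, one row per item, that Apps Script's Range.setValues expects. The optional maxItems parameter mirrors the optional quantity you can give in the menu.

```javascript
// Hypothetical helper: turn an LLM reply into one spreadsheet row per item.
// Assumes one item per line, optionally prefixed by a bullet ("-", "*", "•")
// or a list number ("1." or "1)") followed by whitespace.
function parseListResponse(text, maxItems = Infinity) {
  const rows = [];
  for (const rawLine of text.split(/\r?\n/)) {
    // Strip a leading bullet or list number, then surrounding whitespace.
    const item = rawLine.replace(/^\s*(?:[-*•]|\d+[.)])\s+/, "").trim();
    if (item === "") continue; // skip blank lines
    rows.push([item]); // setValues expects rows × columns, here one column
    if (rows.length >= maxItems) break;
  }
  return rows;
}

const reply = "1. Mercury\n2. Venus\n\n3) Earth\n- Mars";
console.log(JSON.stringify(parseListResponse(reply)));
// → [["Mercury"],["Venus"],["Earth"],["Mars"]]
```

In Apps Script, rows like these could then be written starting at the chosen cell with something like sheet.getRange(row, column, rows.length, 1).setValues(rows).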

CELLM

This is the spreadsheet function used to apply a prompt to a cell. The function is called as follows:

CELLM(prompt, input, llm, arcus, max_tokens, temperature)

The first two arguments are required, and the rest are optional.

- prompt (string): The prompt to apply to the input. Any occurrences of the string "{input}" in the prompt will be replaced with the value of the input parameter. If no occurrences are found, the input will be appended to the end of the prompt instead.
- input (string): The value to be combined with the prompt as described above.
- llm (optional, string): One of "OpenAI" (default) or "Anthropic", optionally followed by a colon and a model name. For OpenAI, choose "gpt-3.5-turbo" (default) or "gpt-4" (if your API key has access); for Anthropic, choose from the model options found here (default "claude-instant-v1"). For example, "OpenAI:gpt-4" uses OpenAI's GPT-4 model, and "Anthropic:claude-v1-100k" uses Anthropic's Claude v1 model with a 100k token context window.
- arcus (optional, boolean): Whether to use Arcus' prompt enrichment to add relevant data to the prompt when available. Requires an Arcus API key to be set in CeLLM → Settings. Default false.
- max_tokens (optional, integer): The maximum number of tokens allowed in the LLM response. Defaults to 250 to prevent rapid cost increases (OpenAI and Anthropic charge by the token).
- temperature (optional, float): A value between 0 and 2 (OpenAI) or between 0 and 1 (Anthropic). Per the OpenAI documentation, higher values make the output more random, while lower values make it more focused and deterministic. Defaults to 0.3.
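The argument handling described above can be sketched in a few lines of JavaScript. This is an illustrative reconstruction from the documentation, not CeLLM's actual source: fillPrompt, parseLlm, and checkTemperature are hypothetical names, and the blank-line separator used when appending the input is an assumption (the docs only say the input is appended to the end of the prompt).

```javascript
// Illustrative reconstruction from the docs, not CeLLM's source.
const DEFAULT_MODELS = { OpenAI: "gpt-3.5-turbo", Anthropic: "claude-instant-v1" };
const MAX_TEMPERATURE = { OpenAI: 2, Anthropic: 1 };

// Replace every "{input}" in the prompt with the input; if the placeholder
// is absent, append the input instead (the "\n\n" separator is an assumption).
function fillPrompt(prompt, input) {
  if (prompt.includes("{input}")) return prompt.split("{input}").join(String(input));
  return `${prompt}\n\n${input}`;
}

// Split an llm argument like "Anthropic:claude-v1-100k" into provider and
// model, using the provider's default model when no model is given.
function parseLlm(llm = "OpenAI") {
  const [provider, ...rest] = llm.split(":");
  if (!Object.prototype.hasOwnProperty.call(DEFAULT_MODELS, provider)) {
    throw new Error(`Unknown provider: ${provider}`);
  }
  const model = rest.length > 0 ? rest.join(":") : DEFAULT_MODELS[provider];
  return { provider, model };
}

// Reject temperatures outside the provider's documented range.
function checkTemperature(provider, temperature = 0.3) {
  const max = MAX_TEMPERATURE[provider];
  if (typeof temperature !== "number" || temperature < 0 || temperature > max) {
    throw new RangeError(`${provider} temperature must be between 0 and ${max}`);
  }
  return temperature;
}

console.log(fillPrompt("Explain {input} to me like I'm five.", "entropy"));
// → Explain entropy to me like I'm five.
console.log(parseLlm("OpenAI").model);                   // → gpt-3.5-turbo
console.log(parseLlm("Anthropic:claude-v1-100k").model); // → claude-v1-100k
```

Keeping the provider defaults and temperature limits in small lookup tables means a newly supported model or provider only needs a new table entry.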

CELLM_EX

This is the spreadsheet function used to generate outputs using example input-output pairs, rather than a prompt, to specify the task. The function is called as follows:

CELLM_EX(exampleInputs, exampleOutputs, testInput, llm, arcus, max_tokens, temperature)

The first three arguments are required, and the rest are optional.

- exampleInputs (cell range): The inputs for the example pairs, for example A1:A5.
- exampleOutputs (cell range): The outputs for the example pairs.
- testInput (string): The input to produce an output for, based on the example input-output pairs.

The rest of the arguments are as described for CELLM, but arcus is ignored and set to false for now.
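The post doesn't specify how CELLM_EX turns example pairs into a prompt. One common approach is few-shot prompting: list each example as an input/output pair, then present the test input with an empty output for the model to complete. The sketch below assumes that format, and buildFewShotPrompt and flattenRange are hypothetical names. It relies on the fact that a Sheets custom function receives a multi-cell range such as A1:A5 as a two-dimensional array of values.

```javascript
// A multi-cell range arrives as a 2D array (rows × columns); flatten it into
// a plain list. A single value is wrapped in a one-element list.
function flattenRange(range) {
  return Array.isArray(range) ? range.flat() : [range];
}

// Hypothetical few-shot prompt builder for CELLM_EX-style inputs.
function buildFewShotPrompt(exampleInputs, exampleOutputs, testInput) {
  const inputs = flattenRange(exampleInputs);
  const outputs = flattenRange(exampleOutputs);
  if (inputs.length !== outputs.length) {
    throw new Error("exampleInputs and exampleOutputs must be the same size");
  }
  const shots = inputs.map((input, i) => `Input: ${input}\nOutput: ${outputs[i]}`);
  // End with the test input and an open "Output:" for the model to complete.
  return [...shots, `Input: ${testInput}\nOutput:`].join("\n\n");
}

const prompt = buildFewShotPrompt([["cat"], ["dog"]], [["cats"], ["dogs"]], "mouse");
console.log(prompt);
```

The printed prompt shows the two example pairs, then "Input: mouse" followed by an empty "Output:" line, which the model would be expected to fill with "mice".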

CELLM_URL

This is the (in-development) spreadsheet function used to apply a prompt to a webpage. It can be used to extract information from a webpage with an LLM. The function is called as follows:

CELLM_URL(prompt, url, llm, arcus, max_tokens, temperature)

The first two arguments are required, and the rest are optional.

- prompt (string): The prompt to apply to the input. Any occurrences of the string "{input}" in the prompt will be replaced with the content of the webpage fetched from the url parameter. If no occurrences are found, the webpage content will be appended to the end of the prompt instead.
- url (string): The content of the <body> tag in the HTML fetched from this URL is stripped of HTML tags and used as input to the prompt.

The rest of the arguments are as described for CELLM, but arcus is ignored and set to false for now.
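As a rough sketch of the url handling described above, the JavaScript below extracts the <body> contents and strips the tags. In Apps Script the HTML itself could be fetched with UrlFetchApp.fetch(url).getContentText(). Regular expressions are a simplification rather than a real HTML parser, and bodyText is a hypothetical name; CeLLM's own implementation may differ.

```javascript
// Hypothetical sketch: take the HTML inside <body>...</body>, drop the tags,
// and collapse whitespace. Regexes are a rough stand-in for an HTML parser.
function bodyText(html) {
  // [\s\S]* matches across newlines; fall back to the whole document
  // when there is no <body> element.
  const match = html.match(/<body[^>]*>([\s\S]*)<\/body>/i);
  const body = match ? match[1] : html;
  return body
    .replace(/<[^>]+>/g, " ") // replace every tag with a space, keeping its text
    .replace(/\s+/g, " ")     // collapse the runs of whitespace left behind
    .trim();
}

const html = "<html><head><title>T</title></head><body><h1>About Time</h1>\n<p>A 2013 film.</p></body></html>";
console.log(bodyText(html)); // → About Time A 2013 film.
```

This simple version keeps the text inside <script> and <style> elements and leaves HTML entities such as &amp; undecoded, both of which add tokens without adding much meaning.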

NOTE:

- Due to the token-based pricing, this function can run up costs pretty quickly.

- Long webpages can result in hitting the LLM max token limit.

- Specifying one of Anthropic's 100k token models (for example, setting llm to "Anthropic:claude-v1-100k" or "Anthropic:claude-instant-v1-100k") allows CeLLM to process much longer webpages. Be mindful of running up costs with large webpages, though.
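Before pointing CELLM_URL at a long page, a back-of-the-envelope token estimate can help. The sketch below uses OpenAI's rule of thumb that one token is roughly four characters of English text; exact counts depend on each provider's tokenizer. estimateTokens, likelyFits, and estimateCost are hypothetical helpers, and the context window and per-1,000-token prices are parameters you would look up for your chosen model rather than values taken from this post.

```javascript
// Rule of thumb: roughly 4 characters of English text per token.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// The prompt plus the reserved response budget (max_tokens) should fit in
// the model's context window, which the caller supplies.
function likelyFits(promptText, contextWindow, maxTokens = 250) {
  return estimateTokens(promptText) + maxTokens <= contextWindow;
}

// Upper-bound cost of one call, treating max_tokens as the largest possible
// response. Prices are per 1,000 tokens and must come from your provider.
function estimateCost(promptText, maxTokens, promptPricePer1k, completionPricePer1k) {
  const promptCost = (estimateTokens(promptText) / 1000) * promptPricePer1k;
  const completionCost = (maxTokens / 1000) * completionPricePer1k;
  return promptCost + completionCost;
}

const page = "x".repeat(400000); // stand-in for ~100,000 tokens of page text
console.log(likelyFits(page, 100000)); // → false (100,000 + 250 exceeds 100,000)
```

Because max_tokens caps the response, the cost estimate is an upper bound on what a single call could charge.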
